Show HN: Mrkff – An Information Overload Management Tool

4 by 15charslong | 2 comments on Hacker News.

Filed under: Celebrities,Green,Aston Martin,Crossover,Performance,Classics

Prince Charles visits Aston Martin with his Aston, helps build a DBX, draws tabloid ire originally appeared on Autoblog on Fri, 21 Feb 2020 13:20:00 EST. Please see our terms for use of feeds.

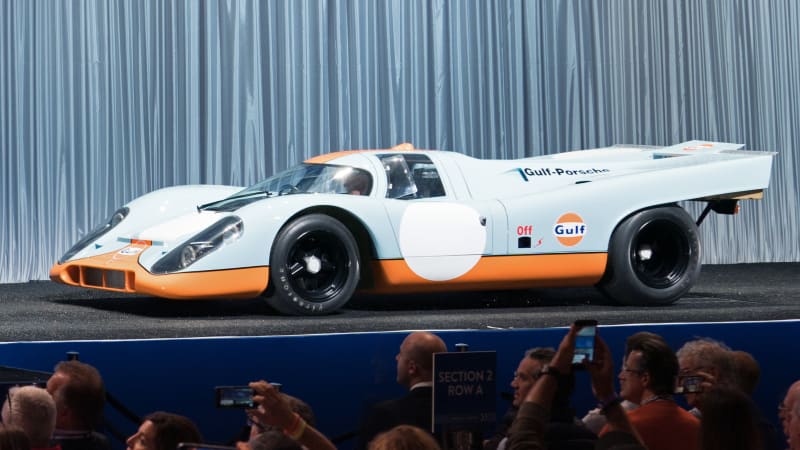

Permalink | Email this | CommentsFiled under: Celebrities,TV/Movies,Porsche,Luxury,Racing,Performance

Continue reading These are the 10 coolest movie Porsches of all time

These are the 10 coolest movie Porsches of all time originally appeared on Autoblog on Thu, 20 Feb 2020 12:00:00 EST. Please see our terms for use of feeds.

Permalink | Email this | CommentsFiled under: Celebrities,Green,Weird Car News,Porsche,Tesla

Elon Musk is not one to mince words, but he may have just lost a potential customer because of a cutting tweet. Gates and Brownlee have met before, and the idea was to have Gates discuss some of what the Bill & Melinda Gates Foundation has planned for this year, which marks the 20th anniversary of the organization. Unsurprisingly, the conversation touched on climate change and in pretty short order sustainable transportation, with Brownlee bringing up Tesla and asking if, when "premium" elect

Elon Musk is not one to mince words, but he may have just lost a potential customer because of a cutting tweet. Gates and Brownlee have met before, and the idea was to have Gates discuss some of what the Bill & Melinda Gates Foundation has planned for this year, which marks the 20th anniversary of the organization. Unsurprisingly, the conversation touched on climate change and in pretty short order sustainable transportation, with Brownlee bringing up Tesla and asking if, when "premium" elect

Continue reading Bill Gates compliments Tesla; Elon Musk does not return the favor

Bill Gates compliments Tesla; Elon Musk does not return the favor originally appeared on Autoblog on Wed, 19 Feb 2020 08:44:00 EST. Please see our terms for use of feeds.

Permalink | Email this | CommentsFiled under: Celebrities,Motorsports

Dale Earnhardt Jr. spent decades taking risks on the track and in the air. Earnhardt said Sunday before the Daytona 500 that he’s changed his approach to flying following a harrowing crash landing near Bristol Motor Speedway last August. Earnhardt, his wife Amy, daughter Isla, dog and two pilots escaped the fiery jet in east Tennessee.

Dale Earnhardt Jr. spent decades taking risks on the track and in the air. Earnhardt said Sunday before the Daytona 500 that he’s changed his approach to flying following a harrowing crash landing near Bristol Motor Speedway last August. Earnhardt, his wife Amy, daughter Isla, dog and two pilots escaped the fiery jet in east Tennessee.

Continue reading Dale Jr. dives into the details to get over fear of flying after jet crash

Dale Jr. dives into the details to get over fear of flying after jet crash originally appeared on Autoblog on Mon, 17 Feb 2020 18:16:00 EST. Please see our terms for use of feeds.

Permalink | Email this | Comments